Underused and underrated.

When I recently asked five UX research experts about their experiences with card sorting, this common thread emerged.

“It feels like a lost art, especially in the world of continuous iterative development,” said Jack Holmes.

This guide is a deep dive into just about everything you need to know about card sorting in 2025, sure. But it also unravels why a method so simple, yet so powerful, still loses out to guesswork, despite the sea of UX research tools at our fingertips.

Throughout this guide, you’ll hear from those five experts I spoke with: Danielle Cooley, Jack Holmes, Maria Panagiotidi, Odette Jansen, and Thomas Stokes.

They’ll share helpful tips, practical advice, and lessons learned from roughly 70 years of combined experience across countless industries and organizations, both in-house and as consultants. Whether you’re a seasoned researcher in the market for an online card sorting tool, a designer who's new to this method and looking for best practices, or somewhere in between, this guide has something for everyone.

{{card-sort-cta-center-dark="/about/ctas"}}

What is card sorting? A definition

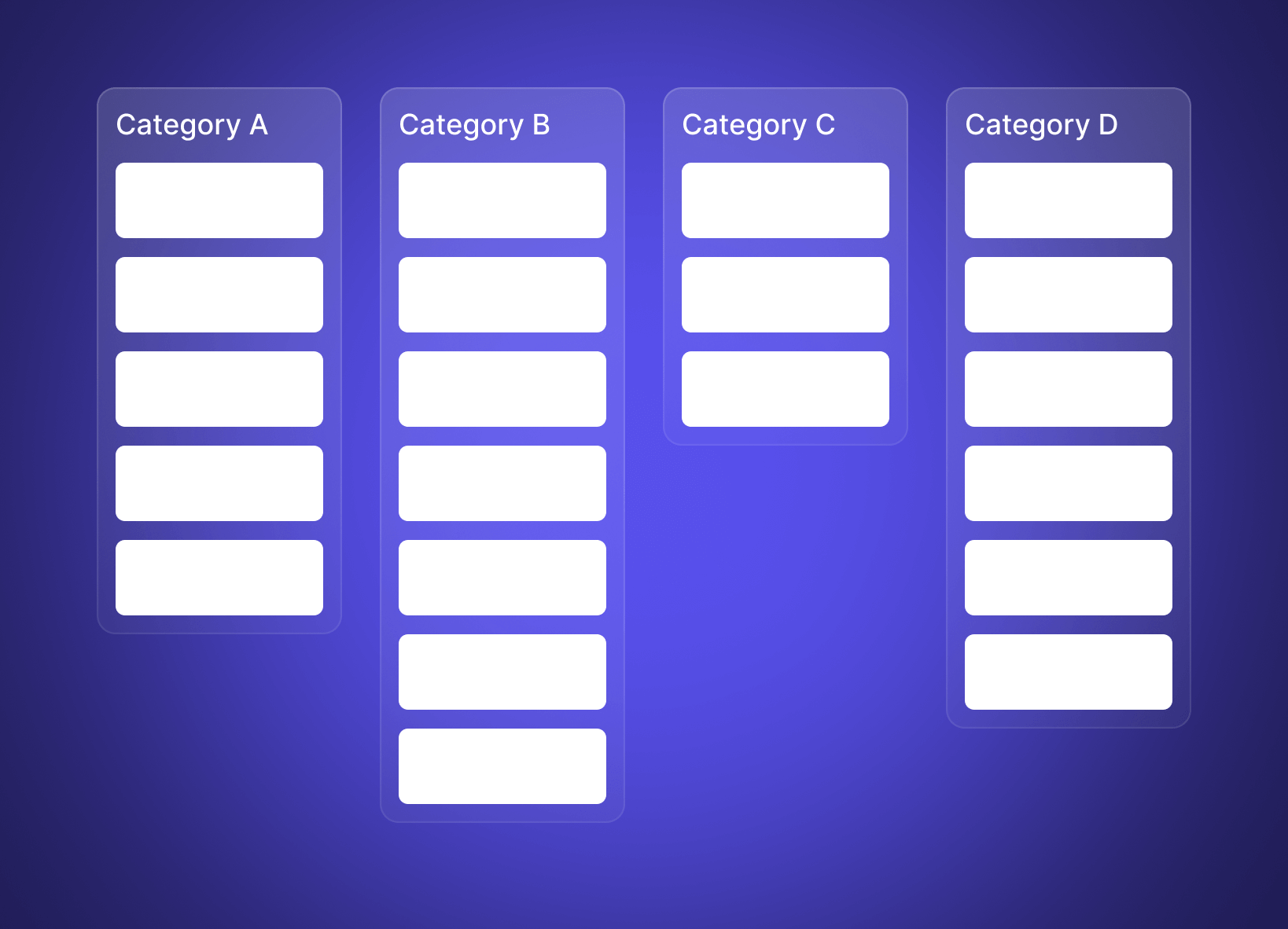

Card sorting is a generative UX research method that helps you understand how people naturally group and label information. Users sort cards into categories: ones they define themselves (open), ones you define (closed), or both (hybrid). Their choices reveal how they think, so you can design more natural, intuitive user experiences.

Rooted in psychology, this method is today used by UX researchers, designers, product managers, and engineers to guide the information architecture (IA) of the software, apps, and websites they build.

“By asking people to sort cards with different topics or items into groups, we can see how they think about the information,” said Panagiotidi. “This then allows us to organize it in a way that makes sense to them — matches their mental models.”

The power of card sorting lies in its flexibility. Card sorts can provide both qualitative and quantitative insights, the latter of which shouldn’t be overlooked:

“Yes, you can qualitatively describe general groupings that emerge and common naming conventions,” said Stokes, “but you should also take advantage of quantitative analyses like similarity matrices and dendrograms on your card sorts.”
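To make the similarity matrix Stokes mentions concrete, here is a minimal sketch in Python. It assumes open-sort results shaped as one dictionary per participant; the data, the card names, and the `similarity_matrix` helper are all hypothetical, and most card sorting tools compute this for you.

```python
from collections import Counter
from itertools import combinations

# Hypothetical open-sort results: one dict per participant,
# mapping each category label they created to the cards placed in it.
sorts = [
    {"Billing": ["Invoices", "Refunds"], "Account": ["Password", "Profile"]},
    {"Money": ["Invoices", "Refunds", "Profile"], "Security": ["Password"]},
    {"Payments": ["Invoices", "Refunds"], "Settings": ["Password", "Profile"]},
]


def similarity_matrix(sorts):
    """Return {(card_a, card_b): share of participants who grouped them together}."""
    pair_counts = Counter()
    for sort in sorts:
        for cards in sort.values():
            # Sort each group so ("A", "B") and ("B", "A") count as the same pair.
            for a, b in combinations(sorted(cards), 2):
                pair_counts[(a, b)] += 1
    return {pair: count / len(sorts) for pair, count in pair_counts.items()}


similarity = similarity_matrix(sorts)
# Print pairs from most to least frequently grouped together.
for (a, b), score in sorted(similarity.items(), key=lambda kv: -kv[1]):
    print(f"{a} + {b}: {score:.0%}")
```

In this made-up data, all three participants grouped Invoices with Refunds, regardless of what they called the category. Pairs that never appear together are simply absent from the result. A dendrogram is typically built by clustering on scores like these.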

You can conduct card sorting with or without a moderator, and remotely with the software of your choice (more on your tooling options later) or in person with notecards or sticky notes. And when you do, there are three types of card sorting to consider.

The three types of card sorts

{{types-of-card-sorting="/about/ctas"}}

Open card sorting

In an open card sort, users receive a set of cards with an item listed on each and group the cards into categories they define. This allows users to share what makes sense to them without outside influence.

The main benefit of open card sorting is that it offers a window into users' natural thinking and preferences for categorization, either validating the expected or revealing the unexpected.

However, results can vary widely across participants in an open card sort, making it hard to find a common structure. The lack of predefined categories may also overwhelm some participants, leading to skewed or less useful results.

Open card sorts are best for early design exploration. Examples include:

- The initial stages of designing a website or application

- Generating new ideas for categorization and naming conventions

- Organizing products in an e-commerce store or features on a SaaS website

- Categorizing articles on a blog or help docs in a product support center

Closed card sorting

In a closed card sort, users are given a set of cards and predefined categories for sorting. Unlike open card sorting, closed card sorting provides constraints for users to work within.

While this doesn't fully reveal how users would naturally categorize information, it does allow you to assess the effectiveness of a proposed structure from the users’ perspective. Providing predefined categories reduces ambiguity and offers clearer insights into user alignment with the proposed structure.

Still, closed card sorting can limit participants' natural inclinations, potentially masking other options they might prefer. Predefined categories can also introduce bias, pushing participants to fit content into what’s provided rather than suggesting what makes the most sense to them.

Whereas open card sorts are ideal for early exploration, closed card sorts are best for later validation. Examples include:

- Validating a proposed information architecture

- Confirming whether users understand your content categorization

- Clarifying and improving unclear or confusing category names

- Comparing multiple sets of predefined categories to understand which users prefer

Hybrid card sorting

A hybrid card sort combines elements of both open and closed study types. You give participants predefined categories, like in a closed card sort, but also allow them to create new categories or suggest changes where they see fit, like in an open card sort.

Hybrid card sorting bridges the gap between open and closed, helping you both validate current categories and discover improvements or alternatives in the same study. The trade-off is that hybrid sorts can be more complex to analyze, since you're dealing with both researcher-defined and user-defined categories.

Hybrid card sorts are ideal for mid-stage refinement when you have a sense of direction, but there's still flexibility. Examples include:

- You've conducted initial research and have a preliminary structure, but want to ensure it aligns with user expectations and are open to adjustments

- Stakeholders have certain non-negotiable categories, but you still want to gather user-driven insights for other potential categories

When (& when not) to use card sorting

If you're unsure how to categorize different things (SaaS features, agency services, educational resources, anything) in your website or product, you should probably run a card sort.

"Card sorts are so inexpensive and, relative to some other techniques, low-effort that it's hard to justify not doing one in many situations," said Cooley.

Unfortunately, convincing cross-functional stakeholders (who are often incentivized to ship more, faster, forever) that it's worthwhile can be the hard part.

"The only time I've been able to justify doing a card sort is when we're doing a big-bang style change," said Holmes. "One time, a card sorting study actually led to the realization that an iterative deployment wouldn't be possible and we needed a larger scale change."

To strengthen your case, keep an eye out for the following red flags, all of which should trigger a card sort:

- A mountain of information. You have a huge set of requirements, and there's no way you can fit everything into a one- or two-level navigation.

- Heated internal debate. When you're so close to the work, it's easy to forget that what makes sense to you doesn't necessarily make sense to everyone. The fastest way to resolve the latest PM-designer debate? The voice of the user.

- Proof of guesswork. Panagiotidi says evidence of decisions based on guesses, often exposed in design critiques with the right questions, is more than enough to justify a card sort.

- User frustration or struggles. An increase in support tickets where users can't find what they're looking for, or other signs of user frustration that surface in product analytics, are also grounds for card sorting.

At the same time, it's just as important to know when not to turn to card sorting.

If you’re already deep into detailed usability flows or validating visual layouts, card sorting is probably too high-level. It’s best used early when you're shaping or refining structure.

This might mean opting for a similar method instead.

Card sorting vs. tree testing

Card sorting and tree testing seek similar outcomes: better information architectures, better user experiences. But they play different roles in getting there.

While card sorting is used early to generate IA ideas and options from user mental models, tree testing (sometimes called reverse card sorting) is used later to evaluate the effectiveness, or findability, of an actual IA.

Instead of sorting cards into categories, tree test participants are given a hierarchical menu or structure (the "tree") outlining the website or system’s IA. Without the influence of visual design, they're asked to find items or complete tasks by navigating the tree.

A tree test helps you identify which parts of the IA work well and which cause confusion or frustration for users. Tree testing is great to use after you've conducted a card sort but before you move on to detailed design and development.

"Too many folks will do an open card sort and call it good," said Stokes. "Instead, consider following up with a closed card sort to test out a proposed H1 hierarchy, and even a tree test after that to assess goal-based success with a new IA."

Because these two methods pair so well, most UX research tools that offer card sorting also offer tree testing. (Like Great Question.)

{{tree-test-cta-center-dark="/about/ctas"}}

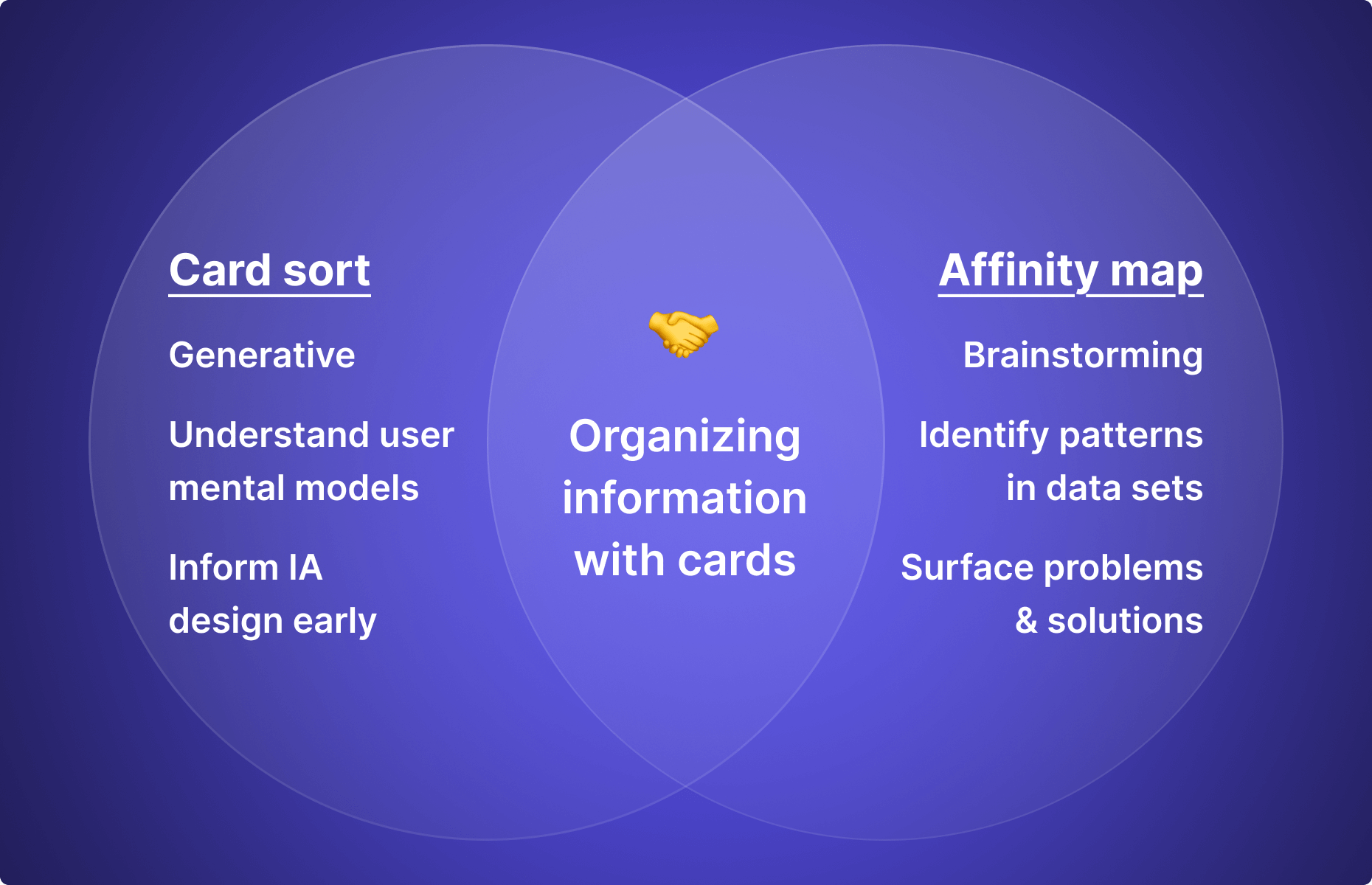

Card sorting vs. affinity mapping

Card sorting is sometimes confused with affinity mapping (or affinity diagramming), since both involve the visual categorization of information with cards.

However, affinity mapping isn't about user mental models or information architecture; it's a collaborative brainstorming exercise used to find patterns in large data sets.

Today, teams often use tools like Miro or FigJam to cluster data (ideas, customer feedback, observations, business challenges) into related groups or themes with digital sticky notes. This can help surface pain points, opportunities, or solutions.

How to conduct a card sort, step by step

1. Define your goal

Like any research study, be clear about what you want to achieve. Are you looking to generate new category ideas for a new IA? Or test the effectiveness of predefined categories? Whatever you decide, communicate what success looks like to your team.

2. Choose the type of card sorting

Decide whether you'll conduct an open, closed, or hybrid card sort, and whether the test will be done remotely or in person, based on your needs and available resources. If your current UX research toolstack doesn’t offer card sorting, evaluate your options.

3. Prepare your cards

List the items you want to test, either in your tool of choice or, if you're running the study in person, on notecards or sticky notes. These might be names of web pages, product features, use cases, or any other type of information you need to categorize. Make sure each card only has one item or topic.

4. Set up your test

For remote card sorting, make sure your study has clear instructions for participants so the session runs smoothly.

Here’s how setting up a card sort works in Great Question:

- Click New study > Card sort

- Enter your study details (e.g., title, goal, participation limit, incentive, screener)

- Enter instructions for users and choose Closed, Open, or Hybrid

- Add your cards and categories and randomize display order for best results

- Click Next > Create & Fund

Easy.
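If you're preparing study materials yourself rather than relying on a tool, randomizing display order per participant can be sketched in a few lines. This is an illustration, not how Great Question implements it; the card names, participant IDs, and the `shuffled_for` helper are hypothetical.

```python
import random

# Hypothetical master list of cards for the study.
cards = ["Invoices", "Refunds", "Password", "Profile", "Notifications"]


def shuffled_for(participant_id, cards):
    """Return a per-participant card order that is random but reproducible."""
    rng = random.Random(participant_id)  # seed with the participant ID
    order = list(cards)  # copy so the master list stays untouched
    rng.shuffle(order)
    return order


for pid in ["p01", "p02", "p03"]:
    print(pid, shuffled_for(pid, cards))
```

Seeding with the participant ID means each person sees a different order, which helps keep card position from nudging everyone toward the same groupings, while letting you reproduce exactly what any one participant saw.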

For in-person card sorting, reserve a quiet room with a large table. Provide participants with the cards and, if desired, a recording device.

5. Recruit participants

Aim for a sample that represents your target users. Typically, 15-30 participants is enough, but this can vary based on the scope and type of card sorting you choose. Factors to consider when determining your sample size include risk level, confidence level, and whether or not you need to reach statistical significance.

{{card-sort-sample-size="/about/ctas"}}

Consider adding an incentive upon study completion to thank your participants for their time and insight. Factors to consider when determining your incentive include study duration and the type of participants (e.g., B2C or B2B).

{{card-sort-incentive-amount="/about/ctas"}}

To determine the ideal sample size and incentive for your card sort (or any other type of study), try our Research Planning Calculator.

Note: Government employees typically can’t receive monetary incentives due to ethical concerns and legal risks. Consider offering a donation to charity in their name or providing a token of appreciation within agency guidelines instead.

6. Run the card sort

The nice thing about using a UX research platform like Great Question: once you create your study and invite your participants, you can sit back and watch the results flow in — all in the same place.

No need to explain the study over and over again to each participant, wrangle hundreds of notecards, or manually send out incentives. Study instructions are shared with participants, results are safely stored, and your participants are rewarded, automatically.

How to analyze card sort data like a seasoned pro

Your card sort is complete. A mountain of data stares back at you, ready for analysis. This is where the magic happens — don't speed past it.

"I've seen designers and junior researchers spend a lot of time working on the card sort itself, but not enough thinking about how they'll analyze the data or what decisions the findings should support," said Panagiotidi. "This often leads to a pile of interesting groupings with no clear path forward.”

Turning raw data into actionable findings and insights allows you to inform your team's design decisions with confidence. Most online card sorting tools come with built-in reporting and analysis, which helps expedite the process.

Start your analysis by getting a broad overview of the results. Scan for any recurring patterns or trends in the way participants have sorted the cards or the category labels they've proposed during open card sorts.

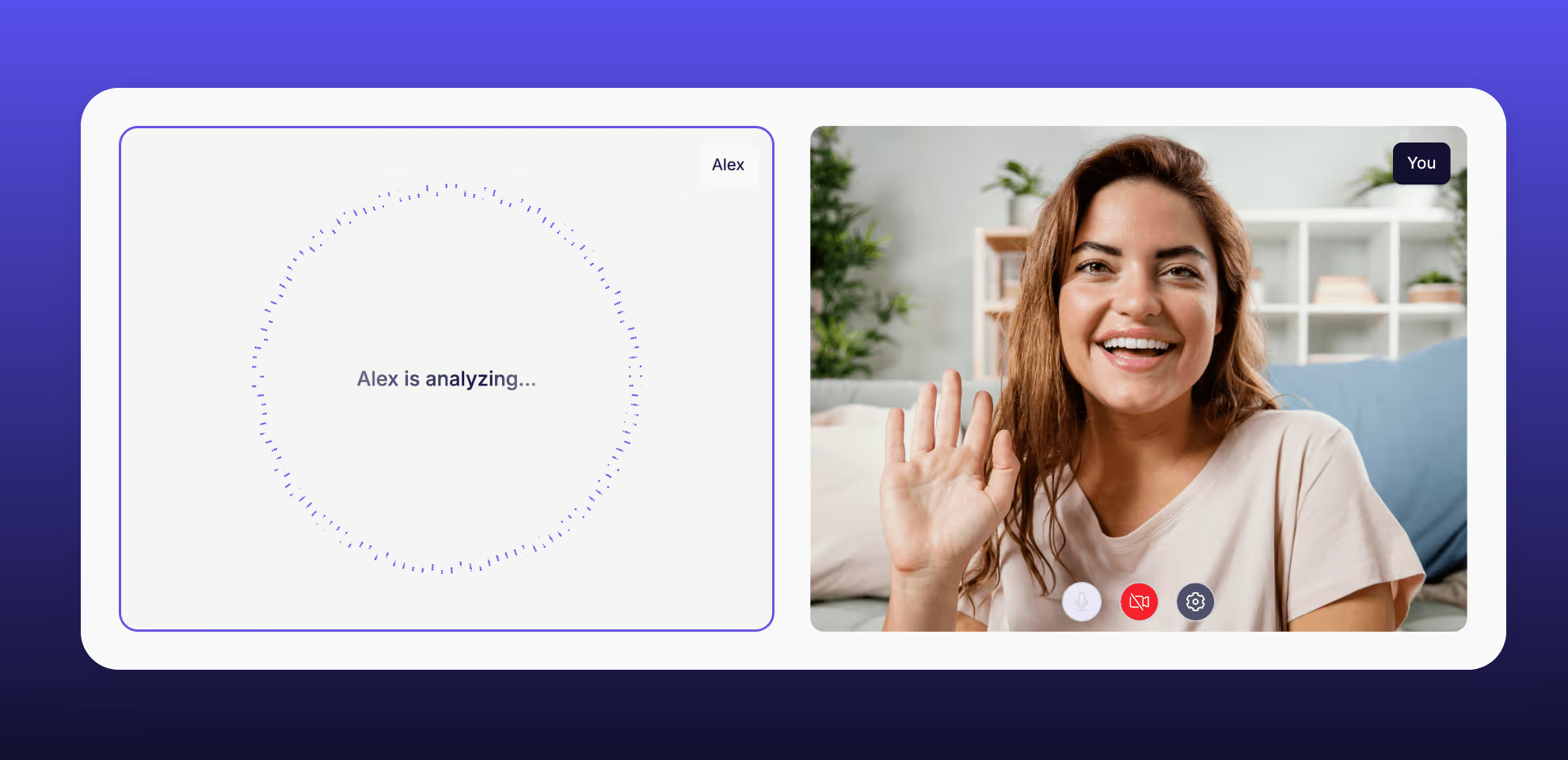

A quick aside on AI: AI isn't a replacement for human analysis or decision-making, but even in its current early state, it can be a helpful way to cut through the noise and jumpstart your analysis. For example, Great Question AI makes it easier to spot hidden patterns and misalignments in user mental models.

If you ran an open card sort, review and standardize category labels. Participants might label categories in slightly different ways, whether it’s varied spellings, capitalizations, or phrasing. By standardizing labels, you'll get a clearer picture of the general consensus among participants. Identify common themes or groupings that emerge consistently. Also take note of unique or outlier categorizations, as they can provide insights into different user perspectives.
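As a rough sketch of that standardization step, assuming you've exported participants' category labels as plain strings: the labels and the `MERGES` table below are hypothetical, and which labels count as "the same" remains a judgment call you make after reading the data.

```python
import re
from collections import Counter

# Hypothetical raw labels typed by participants in an open card sort.
raw_labels = [
    "Billing",
    "billing ",
    "BILLING & payments",
    "Payments",
    "Account settings",
    "account  settings",
    "Acct Settings",
]

# Hand-maintained merges (an assumption: you decide these after reviewing the data).
MERGES = {
    "billing & payments": "billing",
    "payments": "billing",
    "acct settings": "account settings",
}


def normalize(label):
    """Lowercase, trim, and collapse repeated whitespace, then apply manual merges."""
    cleaned = re.sub(r"\s+", " ", label.strip().lower())
    return MERGES.get(cleaned, cleaned)


label_counts = Counter(normalize(label) for label in raw_labels)
print(label_counts.most_common())
```

The automatic part only handles capitalization and spacing. Merging differently phrased labels is kept in an explicit table so the decisions stay visible and easy to revisit.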

With closed card sorts, focus on how often participants place each card into the predefined categories. Look for patterns that suggest agreement or disagreement.
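One simple way to quantify that agreement is to find, for each card, the most popular category and the share of participants who chose it. The sketch below assumes closed-sort results shaped as one card-to-category dictionary per participant; the data and the `placement_summary` helper are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical closed-sort results: one dict per participant, card -> chosen category.
placements = [
    {"Invoices": "Billing", "Password": "Account", "Export data": "Account"},
    {"Invoices": "Billing", "Password": "Account", "Export data": "Reports"},
    {"Invoices": "Billing", "Password": "Security", "Export data": "Reports"},
    {"Invoices": "Billing", "Password": "Account", "Export data": "Billing"},
]


def placement_summary(placements):
    """For each card, return (most common category, share of participants who chose it)."""
    by_card = defaultdict(Counter)
    for participant in placements:
        for card, category in participant.items():
            by_card[card][category] += 1
    summary = {}
    for card, counts in by_card.items():
        top_category, top_count = counts.most_common(1)[0]
        summary[card] = (top_category, top_count / sum(counts.values()))
    return summary


summary = placement_summary(placements)
# List cards from lowest to highest agreement so problem cards surface first.
for card, (category, agreement) in sorted(summary.items(), key=lambda kv: kv[1][1]):
    print(f"{card}: {category} ({agreement:.0%} agreement)")
```

Cards with low agreement, like "Export data" in this made-up example, are the ones worth a closer look: the card label may be unclear, or the proposed categories may overlap.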

To enhance your analysis, here are several techniques to consider:

{{card-sort-data-analysis="/about/ctas"}}

While patterns and clusters provide a structured understanding, qualitative feedback or observations can also be derived through session recordings, especially if you ask participants to think aloud. This ensures your findings and insights are both data-driven and grounded in user behavior and perception.

Real-life examples of card sorting in practice

Enough hypotheticals. These three mini-case studies shared by Cooley, Panagiotidi, and Jansen show the power of card sorting in action.

Designing a company intranet against conventional wisdom

Years ago, Cooley helped Sargento — although the "leader in cheese," a surprisingly small company still working off shared drives — design its first intranet.

“The first rule of websites and intranets specifically is that your information architecture shouldn't follow the org structure,” said Cooley. “People tend not to know which department does what, especially sub-groupings within a department.”

But Sargento proved an exception to the rule. When Cooley ran a card sort with employees, they did consistently group information by department, because they did know which department did what. This was largely the result of a small, local company culture.

“The Marketing Director went to prom with the Senior Shipping Coordinator, their kids went to the same schools, they grew up within blocks of one another. So they just knew each other.”

Findings from Cooley’s card sort defied conventional wisdom, giving her team the confidence to change their initial design direction and prevent a costly redesign later.

Optimizing a sales form to learn why prospects don’t convert

When Panagiotidi was Head of UX Research at Oyster HR, its Sales team was struggling to accurately capture why prospects didn’t convert — vital feedback for the whole company. Preliminary research showed it wasn’t a case of lazy account executives, but a poorly-designed form.

“The categories and sub-options didn’t reflect how reps thought about their conversations, making the form frustrating and time-consuming to complete.”

Because Sales was swamped, Panagiotidi took a “slightly unusual” route: card sorting.

“We asked sales reps to group and label common reasons for lost deals in a way that felt natural to them. This was the first time many had participated in UX research, and they appreciated having their workflow considered and improved.”

The study guided a redesign of the form, removing rarely used categories, merging overlapping ones, and introducing labels based on the Sales team’s input. This led to a significant increase in completion rates, giving Oyster better insight into missing product features and opportunities for improvement.

An added bonus: It strengthened their relationship with Sales.

Guiding the IA of a new menu structure for healthcare software

Recently, Jansen ran a card sorting study to inform the new menu structure of Nordhealth's software.

Her goal was to show Nordhealth's Product Managers what their user base actually expected when using the product.

“This was a fun one because our PMs all had opinions on how this should be structured and different opinions on what was important.”

Given the spirited debate, Jansen ran two card sorts: one with PMs, one with a large set of users.

“When we presented the user data to our PMs, we then also compared it to their answers as a sort of check for their bias, to show that what they thought was needed, was not what our users were doing.”

No better way to settle a debate than the voice of the user.

Rookie card sort mistakes to avoid & best practices to follow instead

While card sorting is relatively simple and flexible, even the best can get tripped up. Fortunately, our panel of experts has seen just about every mistake in the book (and made many themselves) so you don’t have to.

Not having a clear goal

A successful card sort, like any research study, requires setting and communicating a clear goal. Although that goal should be specific and actionable, Holmes reminds us not to lose sight of the bigger picture.

“Ensure you know what's important to customers and the business. You don't want to be presenting results, and leadership asks where their main revenue generating feature is, but you didn't include it because no customer asked for it.”

Giving cards users don’t understand

Whether it’s because you’re using internal jargon or it’s too vague to mean any one thing, confusion kills.

“When you have cards like this, your participants will interpret them very differently, making your results fuzzy,” said Stokes. “Be specific.”

Panagiotidi also warned against the dangers of jargon, a misstep she sees often and has made herself.

“Product teams are often so used to seeing certain terms that they assume users share the same language. They often don't... unclear labels can confuse them and skew the results.”

To avoid this mistake, Panagiotidi recommends doing a small pilot study.

Giving cards that are leading, repetitive, or too broad

In creating your cards, Cooley emphasizes the importance of breaking information down to the smallest possible unit.

“There's a balance to this, of course, but if you just have a card labeled ‘Insurance,’ you'll never know if people would have put ‘Car Insurance’ and ‘Renter's Insurance’ in different categories, for example.”

She also advises against the use of leading or repetitive terms in card labels, descriptions, and images.

“If you have cards labeled ‘Tuxedo Pants,’ ‘Denim Pants,’ and ‘Palazzo Pants,’ you're going to end up with a category called ‘Pants.’ But what might be more appropriate is 3 separate categories like ‘Formal Wear,’ ‘Casual Wear,’ and ‘Resort Wear.’ In short, make sure people are sorting the content and not the words.”

To avoid creating too many similar cards, Holmes always asks himself what he’ll learn from each card. If there is another card that will tell you the same thing, pick one and remove the other.

“I've had clients review cards in the past and say, ‘Isn't this and that kind of the same thing?’ and they were right. Remember we're trying to learn about people's mental models, not get them to design the IA for us.”

Be deliberate with your cards. This isn’t a brain dump.

Not enough time for the number of cards

An effective card sort strikes a balance between depth and duration.

In Stokes’ experience, it takes about 30 minutes to sort 50 cards. Keep this in mind as you set expectations with participants for your study.

{{card-sort-study-duration="/about/ctas"}}

On the other hand, Panagiotidi warns against overloading participants with too many cards.

“Be mindful of the number of cards you're asking users to categorize. More than 30–40 cards can lead to fatigue and rushed decisions.”

Be as realistic as possible about the amount of time required to complete your study, and when in doubt, err on the long side. Better to have users finish early than feel rushed.
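To turn those rules of thumb into a quick planning check, here is a back-of-the-envelope sketch. It assumes sorting time scales linearly with card count, which is a simplification; the `estimate_session` helper, the 25% buffer, and the choice of 40 as the fatigue threshold are illustrative assumptions, not figures from the experts.

```python
# Rule of thumb quoted above: roughly 30 minutes to sort 50 cards.
MINUTES_PER_CARD = 30 / 50
# Upper end of the 30-40 card range beyond which fatigue becomes a risk.
FATIGUE_THRESHOLD = 40


def estimate_session(card_count, buffer=0.25):
    """Return (estimated minutes, fatigue risk flag) for a card sort.

    Assumes time grows linearly with card count and pads the estimate
    by `buffer` to err on the long side.
    """
    minutes = card_count * MINUTES_PER_CARD * (1 + buffer)
    return round(minutes), card_count > FATIGUE_THRESHOLD


for n in (20, 40, 60):
    minutes, fatigue_risk = estimate_session(n)
    note = "(consider trimming cards)" if fatigue_risk else ""
    print(f"{n} cards: about {minutes} min {note}")
```

Treat the output as a starting point for what you tell participants, then adjust after a pilot run with a handful of people.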

15 best card sorting tools in 2025

Will notecards or sticky notes work? Sure. So will stone tablets.

“It’s a woefully inefficient way to record and analyze the responses,” said Stokes. “If anyone still does them on paper, I hope they consider a digital alternative.”

Today, there’s an abundance of card sorting tools to choose from. Scouring the internet, I counted 13 purpose-built for UX research and tacked on two common design collaboration tools that can also work if you’re in a pinch.

Note: As a full-time employee at Great Question, I'm obviously biased. Treat this section as a curation of all card sorting tools in market (that I could find) as of June 2025, rather than a ranking of products. Feature and pricing comparisons are based on publicly-available information. The five experts featured in this guide did not endorse or recommend any tools.

Great Question

Great Question is the all-in-one UX research platform loved by Canva, Miro, and more. In addition to our research CRM and repository, we offer all your favorite methods: user interviews, surveys, focus groups, prototype tests, tree testing, and yes — card sorting.

To test with your own users, integrate with your CRM, import a list, or simply share a link. To test with non-users, tap into Respondent’s global panel of 3M+ verified B2B and B2C participants right in Great Question. Segment and screen candidates, manage email outreach, and send automatic incentives anywhere in the world.

Card sorting features

- Run open, closed, or hybrid card sorts in the same place you recruit participants, store insights, and run all your other methods

- Level up your synthesis with agreement matrices, AI summaries, and category insights alongside advanced AI features

- Dig deeper with recordings and transcripts, then share the most powerful insights with your team through video highlights and reels

Plans & pricing

- Self-service: Free 14-day trial, then $99 per user per month (billed monthly) / $82.50 per user per month (billed annually — 2 months free). Great for lean teams ready to standardize and scale their research processes.

- Enterprise: Custom pricing available. Great for large orgs who need extra support, scale features, and best-in-class security and compliance.

Try card sorting in Great Question. Or, book a demo with our team.

Optimal Workshop

Optimal Workshop (also known as Optimal) is a user research tool that offers a range of quantitative methods, like card sorting, tree testing, first-click testing, prototype testing, and surveys.

Card sorting features

- Supports open, closed, and hybrid studies

- Automated analysis to uncover insights from card sorts

- Record and replay participants' sessions to gain a deeper understanding

Plans & pricing

- Starter: $107 per month (billed annually) / $199 per month (billed monthly). Includes 1 seat, 2 Live Studies, tree testing, and card sorting.

- Organization: Contact Sales for pricing. Plan starts with 5 seats, includes more methods and onboarding support.

Maze

Maze is a user research platform primarily known for unmoderated research with methods like prototype testing, surveys, live website testing, card sorting, and tree testing, but has recently added moderated user interviews.

Card sorting features

- Supports open and closed studies, but not hybrid

- Analyze with agreement rates, agreement matrices, and similarity matrices

- Ready-made one-page reports

Plans & pricing

- Free: $0 per month. 1 study per month, 5 seats. Card sorting not included.

- Starter: $99 per month. 1 study per month, 5 seats. Only closed card sorting included.

- Organization: Contact Sales for pricing. Unlimited studies and seats. Open and closed card sorting included.

Lyssna

Similar to Maze, Lyssna (formerly known as UsabilityHub) is known for its range of unmoderated methods — card sorting, first click testing, five second testing, live website testing, preference testing, prototype testing, surveys, and tree testing — but has also recently introduced moderated interviews.

Card sorting features

- Supports open and closed studies, but not hybrid

- Analyze with agreement matrices, similarity matrices, and card and category views

- On-demand panel with 690k participants

Plans & pricing

- Free: 2 minute length limit for surveys and tests, including card sorts.

- Basic: $75 per month (billed annually), $89 per month (billed monthly). 5 minute length limit for surveys and tests, including card sorts.

- Pro: $175 per month (billed annually), $199 per month (billed monthly). Unlimited study length.

- Enterprise: Contact Sales for pricing. Unlimited study length.

UserTesting

UserTesting is the oldest (and by many accounts, most expensive) tooling option in the user research space. In 2023, UserTesting acquired UserZoom to expand its platform with more methods and a repository (UserZoom acquired EnjoyHQ, a repository tool, in 2021).

Card sorting features

- Supports open, closed, and hybrid studies

- Analyze with distance matrices, multidimensional scaling, and dendrograms

Plans & pricing

Pricing for UserTesting is not listed on its website.

- Essentials: Contact Sales for pricing. Card sorting and tree testing not included.

- Advanced: Contact Sales for pricing. Card sorting and tree testing not included.

- Ultimate: Contact Sales for pricing. Card sorting and tree testing included.

Dscout

Dscout is an experience research platform that offers seven research methods: usability testing, concept testing, field studies, diary studies, surveys, interviews, and card sorting.

Card sorting features

- Supports open, closed, and hybrid studies

- Customize tests with category and card randomization, skip logic, and question piping

- Recruit from Dscout's panel or via its "partnerships with leading qualitative research panel providers"

Plans & pricing

Pricing for Dscout is not listed on its website.

- Core: Contact Sales for pricing. Includes card sorting.

- Select: Contact Sales for pricing. Includes card sorting.

- Enterprise: Contact Sales for pricing. Includes card sorting.

UXtweak

UXtweak is a user research tool that offers a range of unmoderated methods, from card sorting and tree testing to five second testing and preference testing, and recently added moderated interviews.

Card sorting features

- Supports open, closed, and hybrid studies

- Analyze with similarity matrices, dendrograms, and other visualization tools

- Randomize cards and categories and add images and skip logic

Plans & pricing

- Free: 1 user. Card sorting included with limitations: 1 active study with up to 10 responses and 20 cards per month.

- Business: $92 per user per month (billed annually), $125 per user per month (billed monthly). Card sorting included without limitations.

- Custom: Contact Sales for pricing. Card sorting included without limitations.

Useberry

Useberry is a UX research platform specifically for unmoderated studies: card sorting, tree testing, first click testing, five second testing, preference testing, and surveys.

Card sorting features

- Supports open and closed studies, but not hybrid

- Analysis features either not available or not listed on Useberry's website

Plans & pricing

- Free: 1 seat, add up to 20. Card sorting limited to 10 responses per month.

- Growth: $67 per month (billed annually), or $79 per month (billed monthly). 1 seat, buy up to 20. Card sorting limited to 300 responses per month but you can buy up to 2,000.

- Enterprise: Contact Sales for pricing. Starts with 5 seats and 50,000 responses per year.

UX Metrics

UX Metrics is an affordable point solution for card sorting and tree testing.

Card sorting features

- Supports open, closed, and hybrid studies — moderated or unmoderated

- Includes frequency rankings and agreement scores, but export to XLS required for deeper analysis

Plans & pricing

- Free: 3 free card sort participant results included, upgrade required for more.

- Pro: $49 per month. Unlimited card sort studies and participant results.

Userlytics

Userlytics offers a range of moderated and unmoderated research methods, including card sorting and tree testing.

Card sorting features

- Supports open, closed, and hybrid studies

- Analysis functionality is unclear: "advanced metric system"

- Panel features 2 million participants from 150+ countries

Plans & pricing

With five plans across two categories, Userlytics' pricing is the most complicated of any tool I found. Card sorting is included on all plans, with "flexible monthly payment plans" available upon request.

For access to the Userlytics panel:

- Project-based: No subscription required, just a minimum purchase of 5 participant sessions. Pricing not listed.

- Enterprise: As low as $34 per session — "annual plan with volume discounts"

- Limitless: Contact Sales for pricing.

For self-recruitment (bring your own participants):

- Premium: $699 per month, billed annually ($8,388) unless otherwise requested.

- Advanced: $999 per month, billed annually ($11,988) unless otherwise requested.

UXArmy

UXArmy offers a range of moderated and unmoderated methods, with participant recruitment and analysis functionality as well.

Card sorting features

- Supports open, closed, and hybrid studies

- Analyze participants by demographic data and abandon rates

- Identify confusing cards with movement paths and categorization scores

Plans & pricing

- Free: 1 full seat, 20 monthly credits. Full access to card sorting and tree testing on all plans.

- Starter: $25 per month (billed annually), $29 per month (billed monthly). 1 full seat, 25 monthly credits.

- Pro: $82 per month (billed annually), $99 per month (billed monthly). 3 full seats, 70 monthly credits.

- Organization: Contact Sales for pricing. Unlimited seats, custom credits.

UserBit

UserBit offers UX research and design tools specifically for agencies and freelancers.

Card sorting features

- Card sorting is supported, but the website isn't clear on whether that includes open, closed, and hybrid studies

- Analyze results with similarity matrices and heatmaps

Plans & pricing

- Free: Up to 2 studies per method (including card sorting).

- Usage based: $30+ per month. Up to 5 studies per method (including card sorting).

- Unlimited: $199 per month. Unlimited team members, projects, and studies.

kardSort

kardSort is a point solution for card sorting, primarily used in academia.

Card sorting features

- Supports open, closed, and hybrid studies

- Doesn't include built-in analysis functionality, but supports data export to CSV, SynCaps V3, and Casolysis

- Integrated pre- and post-study questionnaires

Plans & pricing

- Free for everyone, forever

Non-research tools with card sort functionality

Broader design and collaboration tools like Miro and Figma also offer their own card sorting templates. However, due to limitations in participant recruitment, study management, and research analysis, these are only ideal as short-term solutions. Long-term, a tool purpose-built for researchers is recommended.

The bottom line

Card sorting is a simple, powerful method used to learn how users naturally organize information. By better understanding users’ mental models, teams can design and build better products with more intuitive information architectures.

And yet, the five experts I spoke to, with roughly 70 years of experience between them, often see this method overlooked. Cooley summed up the case for card sorting best.

“Many teams are confident they know how things should be organized,” said Cooley. “Sometimes they're right. More often, they’re not. Why waste time, money, and energy on conference table debates when you can just run a study and find out?”

—

Thanks to Danielle Cooley, Jack Holmes, Maria Panagiotidi, Odette Jansen, and Thomas Stokes for contributing to this guide.
